In part I of this article, I covered the need for prompt engineering and some basic techniques of prompt engineering, viz., simplicity, clarity, conciseness, prompt format, and context. In part II, we focused on a few more techniques like drawing clear boundaries, defining output format, defining a persona, and the importance of punctuation. Let’s look at a few more techniques. But before that, if you were wondering early morning on 28th June as to what went wrong with all the OpenAI prompts that you had written earlier… “has the turbo-3.5 model gone crazy?” No… it’s just got upgraded ?! So, it will not produce a similar output even at 0oC (I meant temperature!). A quick observation is that the model talks too much now. If you want the older result, you must use the snapshot model 0301 instead of the latest one. 0301 models will vanish on 13th September. So, hurry up and reengineer all your prompts to make it work with the latest model. As Always, happy prompting ?. Okay, let’s continue…

At times, it’s best to talk less (did I hear you saying, “all times?”)

Let’s go back to our earlier example, where we gave a poem extract and asked for a few details in the prompt. The same can be accomplished by providing a skeleton as the prompt and leaving AI to add the skin.

AI will try to “fill in the blanks.” The point to note here is, it’s not always about providing descriptive instructions. It’s all about clarity. Also, I’ve intentionally given a JSON structure just to illustrate how we can directly consume the output in the calling application.

In-Context Learning:

Before moving to the next technique, let’s understand what In-Context Learning is. Traditionally, training a deep learning model involves providing it with training data and coming up with weights that can best generalize the data provided. Fine-tuning these models involves adjusting these weights to fit them for specific problems. The data needed is relatively high. However, in In-Context Learning, we provide an LLM with one or more examples so that it sort of “learns” from the example and completes the task. I say “sort of learns” because there is no change in the weights. In other words, it’s not actually learning anything new but is able to figure out the concept involved in the task based on the example and perform the task. If you want to know more about this, here is a blog by Stanford AI lab.

Depending on the number of examples given in the prompt, we call it zero-shot, one-shot, or few-shot prompt/training where “shot” refers to the (problem, solution) pair.

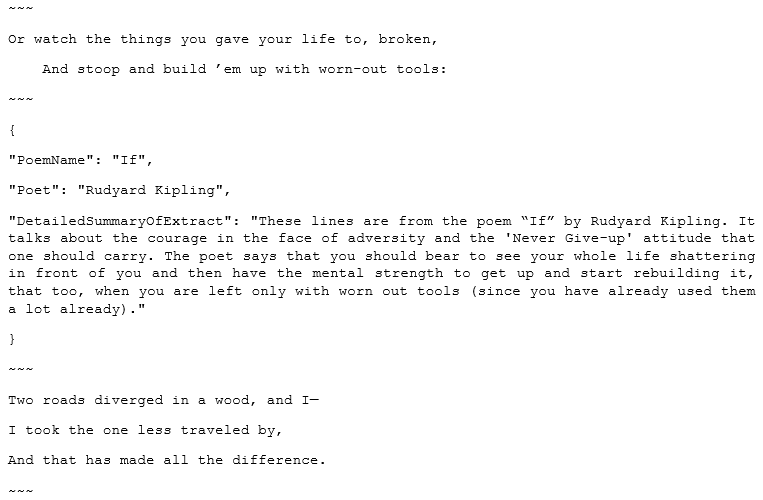

Now, let’s come back to the technique part. Let’s say you gave the previous prompt but were not very satisfied with the AI’s response, especially in the summary of the extract part. Here is how you can hint the AI with a one-shot prompt.

The above is an example of a one-shot prompt. It shows AI how to perform the task using one example, and AI takes care of the rest. This technique is good for steering AI to produce results in a specific style, tone, format, etc. Ultimately, it enhances specificity. It is also useful to demonstrate complex tasks instead of creating instructions on how to do them. There are scenarios where you may have to provide multiple examples, and it becomes a few-shot prompt.

“Byte” what AI can chew – divide into smaller chunks:

There are limits to the number of characters your prompt can contain depending on the model used. In the case of OpenAI’s GPT-3 DaVinci models, its 4000 tokens, including prompt & AI’s response (recall, tokens are a group of characters; here ~4 characters = 1 token). In the case of GPT-4, this touched 32K. But why this restriction? Because there is a limit to the number of characters, an LLM can “comprehend.” So this is referred to as the “context window” in the LLM world. Say, you want to process some content (e.g., summarize). You need to give the whole content as part of your prompt and then the instructions to summarize it. But if the content runs to several pages and exceeds the context window, getting the summary in one go is impossible. In such cases, you can obviously split the content into several parts within the context window and process it. But there is an issue. The output may vary depending on where you cut the split. If the input can be logically split by headings, great… but not always this is possible – for e.g., a JSON that describes something or a transcript of a discussion. In such cases, auto-fixing the point of the split could be challenging. This is, unfortunately, a hyperparameter (in the world of AI/ML, any unknown value is conveniently a “hyperparameter” :-). The solution is to experiment with multiple samples and fix the split that best suits your use case.

Chain Rule of prompt engineering (not calculus, thankfully!)

Imagine you have a very complex task for AI. It may not be easy either for you to create an effective “monolithic” prompt that clearly articulates what is expected or for the AI to understand the prompt and perform the task. However, in some cases, the task can be divided into parts in such a way that the output of the previous step becomes the input of the next. In these cases, you can create a workflow consisting of several steps, write a prompt for each step, and chain them all together using the outputs of the previous step as the input of the next. Say, for example, your use case involves decision-making, reasoning, formatting, and summarization. I’d write a separate prompt for decision-making & reasoning and another prompt to extract relevant information from the previous output and summarize it in the required format. This is a very powerful technique – like the chain rule in calculus – though it may sound very simple.

I think we have “slightly” exceeded the 1000 words context window permitted for this article?. So, let me wrap it up here for this post. Until I see you again, Keep Prompting!