Digital disruption is spreading across the world at a rapid pace. To adapt to these digital realities, enterprises are proactively moving towards digital adoption, and their interest in AI and ML has reached an unprecedented level. In a survey on global AI adoption conducted by the analyst firm Cognilytica, 90% of respondents said they had already implemented, or planned to implement in the short term, at least one of the AI patterns. Although AI and ML are the trendsetters, AutoML, with its power to perform ETL tasks, data pre-processing, and transformation, became popular by the end of 2020.

Existing ML-based solutions require manual effort in tasks such as data preparation, model construction, algorithm selection, and hyperparameter tuning and compression. According to a report by EMC, the world is witnessing a data explosion, and data production will multiply tenfold, from 4.4 trillion gigabytes to 44 trillion gigabytes, in the near future. Existing ML solutions may be inadequate to process this increasing amount of data. That is why AutoML is growing in popularity and is being widely used.

AutoML: The New-Age ML

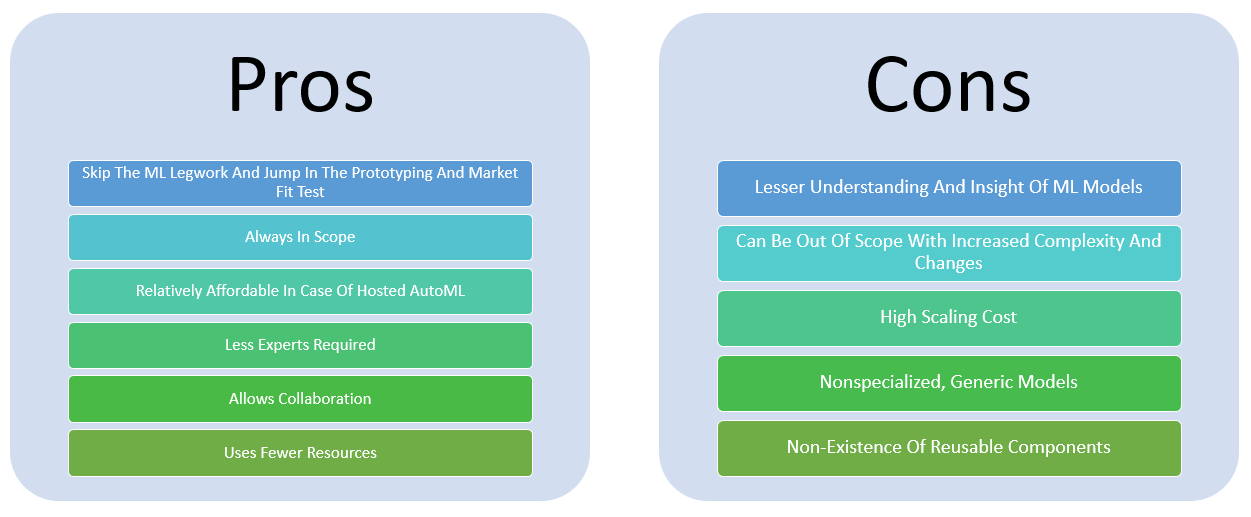

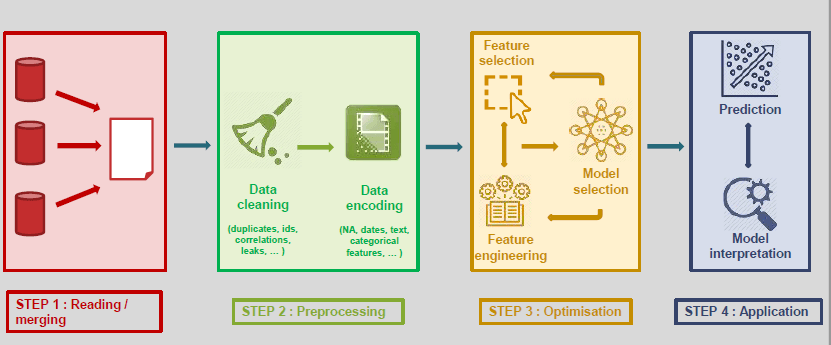

AutoML (Automated Machine Learning) tools and platforms have emerged in the market to help solve the shortage of data science talent and to help business analysts build predictive models with minimal effort.

The robust automation in AutoML helps bridge the skill gap by empowering even non-tech companies and non-experts to use machine learning models and techniques smoothly. AutoML makes the benefits of machine learning quickly available to all.

With advanced diagnostic, predictive, and prescriptive analytics capabilities, AutoML has created a new role: the citizen data scientist.

The Evolution from Programming Languages to AutoML

The programming languages R and Python made data science a mainstream practice, while older platforms such as SAS remain widely used. Yet these programming languages require a deep understanding of the underlying algorithms to tune models effectively.

A shift from programming languages to data mining platforms like RapidMiner, KNIME, and SPSS allowed modeling without code, thanks to their drag-and-drop interfaces. However, these platforms ran on sampled data and had limitations when handling an immense volume of data. They also required someone to choose the appropriate models and create the right model ensembles.

Cloud hyperscalers like Google, Microsoft, and Amazon delivered on-cloud ML services to handle immense volumes of data and extended them with AutoML capabilities. Google AutoML, Azure ML, and Amazon SageMaker offer Machine Learning as a Service, which still needs coding. Their functionality extends to data preparation (through data labeling or notebooks), feature engineering, model selection, and learning. These ML products allow parameter specification* from client APIs and scale well in the cloud.

(*SageMaker allows for hyperparameter optimization.)

Now we have dedicated AutoML platforms like DataRobot, Dataiku, and H2O.ai that also bring in community collaboration and MLOps. These focus on fast model development while offering end-to-end solutions, health checks, and versatility in the ML problems they solve.

What More Can AutoML Do for Your Business?

- AutoML fulfills one of the ultimate goals of a business: a quick time-to-market.

- Many companies struggle to make the most of Artificial Intelligence and Machine Learning because the field has a high entry barrier: it requires sophisticated expertise and resources that many companies cannot afford on their own today. AutoML breaks that barrier by democratizing the benefits of AI/ML, making them accessible to every business!

The automated component helps start the ML journey from scratch swiftly and allows scaling up or down economically along the way. It can also be more accurate (less human bias and subjectivity) and keeps the focus on business problems rather than on modeling. Thus, it reduces the trouble of recruiting and retaining ‘Data/ML Experts’, a relatively rare and expensive profile. Need more assurance? Gartner claims that more than 40% of data science tasks will be automated. So AutoML is a strong answer for developing enterprise AI/ML capabilities in a hassle-free and speedy way.

How Does AutoML Work?

Working with AutoML is fuss-free. It is as easy as feeding in all our data and getting out the final model and predictions. That’s it!

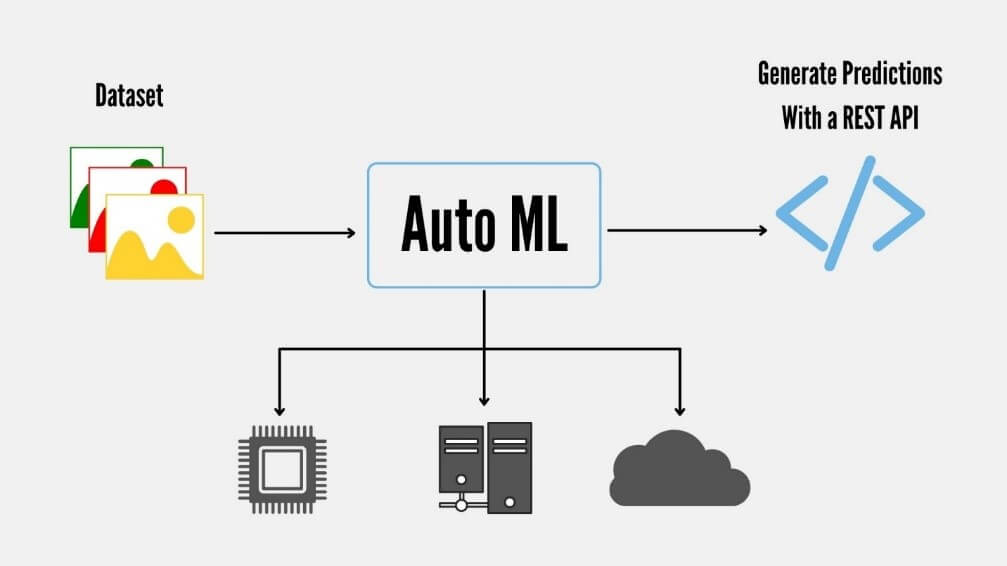

Going a little deeper (without getting too technical), a typical Machine Learning workflow consists of four processes: data ingestion, pre-processing, optimization, and prediction.

In traditional ML, every step, from ingesting data to pre-processing, optimizing, and predicting outcomes, is controlled and performed by people. With AutoML, people need to work on only two prime aspects: data acquisition/collection and prediction. All the steps in between are automated, delivering a model that is well optimized and prediction-ready. This end-to-end automation can make the whole ML experience far less of a hassle.
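To make the idea concrete, here is a minimal sketch in plain Python of what "automating the steps in between" can look like. It is not how any particular AutoML platform works: the function names (`fit_scaler`, `auto_ml`, `make_demo_data`), the synthetic two-class data, and the tiny candidate list (a nearest-centroid model and k-nearest neighbours) are all assumptions made purely for illustration. The user supplies data and asks for predictions; scaling, model comparison, and model selection happen inside `auto_ml`.

```python
import random
import statistics


def fit_scaler(train_rows):
    """Learn per-feature mean and spread on training rows; return a scaling function."""
    columns = list(zip(*train_rows))
    means = [statistics.fmean(c) for c in columns]
    stdevs = [statistics.pstdev(c) or 1.0 for c in columns]

    def scale(rows):
        return [[(v - m) / s for v, m, s in zip(row, means, stdevs)] for row in rows]

    return scale


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def knn_predict(train_x, train_y, row, k):
    """Majority vote among the k nearest training rows."""
    nearest = sorted(zip(train_x, train_y), key=lambda p: squared_distance(p[0], row))[:k]
    votes = [label for _, label in nearest]
    return max(sorted(set(votes)), key=votes.count)


def centroid_predict(train_x, train_y, row):
    """Pick the class whose mean point is closest to the row."""
    best_label, best_dist = None, float("inf")
    for label in sorted(set(train_y)):
        members = [x for x, y in zip(train_x, train_y) if y == label]
        center = [statistics.fmean(c) for c in zip(*members)]
        dist = squared_distance(center, row)
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label


def auto_ml(rows, labels, seed=0):
    """Split, scale, try every candidate model, and return the best one."""
    order = list(range(len(rows)))
    random.Random(seed).shuffle(order)
    cut = int(0.75 * len(order))
    train_idx, valid_idx = order[:cut], order[cut:]

    scale = fit_scaler([rows[i] for i in train_idx])
    train_x, train_y = scale([rows[i] for i in train_idx]), [labels[i] for i in train_idx]
    valid_x, valid_y = scale([rows[i] for i in valid_idx]), [labels[i] for i in valid_idx]

    # Candidate models; a real platform would search a far larger space.
    candidates = {"centroid": lambda row: centroid_predict(train_x, train_y, row)}
    for k in (1, 3, 5):
        candidates[f"knn(k={k})"] = lambda row, k=k: knn_predict(train_x, train_y, row, k)

    def accuracy(model):
        return sum(model(x) == y for x, y in zip(valid_x, valid_y)) / len(valid_y)

    best_name = max(candidates, key=lambda name: accuracy(candidates[name]))
    best_model = candidates[best_name]

    def predict(new_rows):
        return [best_model(x) for x in scale(new_rows)]

    return best_name, accuracy(best_model), predict


def make_demo_data(n_per_class=30, seed=42):
    """Synthetic data: two well-separated clusters standing in for real records."""
    rng = random.Random(seed)
    rows, labels = [], []
    for label, center in ((0, 0.0), (1, 6.0)):
        for _ in range(n_per_class):
            rows.append([rng.gauss(center, 1.0), rng.gauss(center, 1.0)])
            labels.append(label)
    return rows, labels


rows, labels = make_demo_data()
best_name, best_accuracy, predict = auto_ml(rows, labels)
print(best_name, best_accuracy)
print(predict([[0.0, 0.0], [6.0, 6.0]]))
```

The caller only touches the first and last lines of the workflow (providing `rows` and `labels`, then calling `predict`), which mirrors the two human-facing aspects described above.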

In addition, AutoML takes care of complicated and subjective steps like Hyperparameter Optimization, Feature Engineering (Feature Generation + Selection), and Model Interpretability, which makes it an extremely helpful tool even for ML experts! (More on this in subsequent blogs.) Thus, AutoML can work for you in multiple ways!
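As a rough illustration of two of these steps, the sketch below combines a simple correlation-based feature filter with a random search over hyperparameters. Everything here is an assumption chosen for brevity: the helper names (`select_features`, `random_search`, `train_linear`), the 0.3 correlation threshold, the synthetic data in which the third feature is pure noise, and the use of a gradient-descent linear model. Real AutoML tools use more sophisticated techniques; this only shows the shape of the idea.

```python
import math
import random
import statistics


def pearson(a, b):
    """Pearson correlation between two equally long sequences."""
    mean_a, mean_b = statistics.fmean(a), statistics.fmean(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    norm = math.sqrt(sum((x - mean_a) ** 2 for x in a) * sum((y - mean_b) ** 2 for y in b))
    return cov / norm if norm else 0.0


def select_features(xs, ys, threshold=0.3):
    """Feature selection: keep columns whose |correlation| with the target is high enough."""
    return [j for j, col in enumerate(zip(*xs)) if abs(pearson(col, ys)) >= threshold]


def keep_columns(xs, columns):
    return [[row[j] for j in columns] for row in xs]


def predict_one(weights, x):
    return sum(w * v for w, v in zip(weights, x))


def train_linear(xs, ys, learning_rate, epochs):
    """Fit a linear model (no intercept) with batch gradient descent."""
    weights = [0.0] * len(xs[0])
    for _ in range(epochs):
        grads = [0.0] * len(weights)
        for x, y in zip(xs, ys):
            error = predict_one(weights, x) - y
            for j, v in enumerate(x):
                grads[j] += 2 * error * v / len(xs)
        weights = [w - learning_rate * g for w, g in zip(weights, grads)]
    return weights


def mse(weights, xs, ys):
    return statistics.fmean([(predict_one(weights, x) - y) ** 2 for x, y in zip(xs, ys)])


def random_search(train_x, train_y, valid_x, valid_y, trials=20, seed=0):
    """Hyperparameter optimization: sample settings at random, keep the best on validation data."""
    rng = random.Random(seed)
    best = None
    for _ in range(trials):
        params = {
            "learning_rate": 10 ** rng.uniform(-3, 0),  # log-uniform in [0.001, 1]
            "epochs": rng.randint(10, 200),
        }
        weights = train_linear(train_x, train_y, **params)
        score = mse(weights, valid_x, valid_y)
        if best is None or score < best[0]:
            best = (score, params, weights)
    return best


# Synthetic data: the target depends on features 0 and 1; feature 2 is noise.
rng = random.Random(7)
xs = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(300)]
ys = [3 * x[0] - 2 * x[1] + rng.gauss(0, 0.1) for x in xs]
train_x, valid_x = xs[:225], xs[225:]
train_y, valid_y = ys[:225], ys[225:]

selected = select_features(train_x, train_y)  # chosen on training data only
best_mse, best_params, best_weights = random_search(
    keep_columns(train_x, selected), train_y,
    keep_columns(valid_x, selected), valid_y,
)
print(selected, best_params, best_mse)
```

Random search is used here only because it is easy to read; it stands in for whichever optimization strategy a given platform actually applies.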

How to Get Started in AutoML?

A business and its growth are measured by metrics spread across a dashboard. Retail organizations have a customer churn metric, while insurance organizations have a delinquency metric. Organizations in every industry collect data to build a better customer profile and improve their market understanding.

Moving these metrics to the next level, by predicting them and understanding the intricate patterns hidden within them, will certainly open up a new perspective. Hexaware offers to dive deep into such metrics and datasets to generate insights by identifying patterns and building classification, prediction, and regression models.

Some AutoML platforms that are leveraged by Hexaware customers include:

- DataRobot

- H2O.ai

- Dataiku

- AWS – Amazon SageMaker Autopilot

- Microsoft – Microsoft Azure AutoML

- GCP – Google Cloud AutoML