Well, what if I told you that generative AI never actually answers your questions, even though it feels like it does? OK, here is what happens under the hood of generative AI… when you type in a question, it treats the question as a sequence of characters[1] and simply tries to continue (OK, “predict”) that sequence with characters that logically fit it, much like you continuing a number sequence in an aptitude test. This is possible because the AI has learned an enormous range of word patterns from text on the internet (“almost all possible patterns” would be hyperbole). So why does it provide the answer to your question, then? Because an answer is the most likely word sequence to follow a question. This is an oversimplified explanation, meant only to make the concept easy to digest[2]. Moral of the story –

- Your input to AI doesn’t have to be a question; it just has to be the “magic sequence” that the AI can continue in order to produce the response you want. That magic sequence is called a “prompt”. Remember your school theatre performance, where the teacher was “prompting” you with the dialogue from offstage?

- Obviously, AI’s response can only be as good as the prompt (and the prompter).

This means there is a need for a “process of crafting high-quality prompts that effectively elicit the desired response from the AI system”[3] – Prompt Engineering. Now that we have the fundamental idea, let us look at some techniques that can help you in your journey as a “Prompt Engineer”. The intent is not to create a manual but to provide some hints… and I’ll do it in several parts. This way, you spend less time reading!
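If you like to see ideas in code, here is a toy version of the “continue the sequence” behaviour described at the start. It is only a sketch under heavy assumptions: real models work on tokens and learned probabilities, while this one counts which word follows which in three made-up sentences. The names `TOY_CORPUS`, `train_bigrams`, and `continue_sequence` are invented for this illustration.

```python
from collections import Counter, defaultdict

# Made-up "training data" for this sketch; a real model learns from far more text.
TOY_CORPUS = [
    "the capital of france is paris",
    "the capital of japan is tokyo",
    "the largest city of france is paris",
]


def train_bigrams(corpus):
    """Count, for every word, which words were seen right after it."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def continue_sequence(follows, prompt, max_words=5):
    """Extend the prompt one word at a time with the most frequent follower."""
    words = prompt.lower().split()
    for _ in range(max_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # nothing was ever seen after this word, so stop
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


model = train_bigrams(TOY_CORPUS)
print(continue_sequence(model, "the capital of france"))
# -> the capital of france is paris
```

Notice that the code never “answers” anything. It just keeps appending the likeliest next word, and the result happens to read like an answer.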

Keep it simple, clear & concise but descriptive when needed:

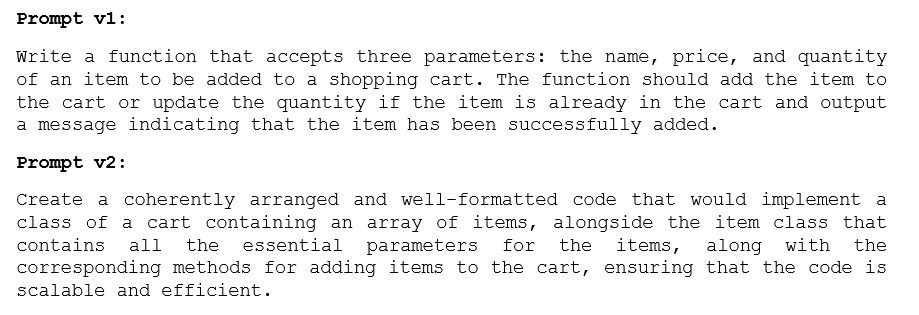

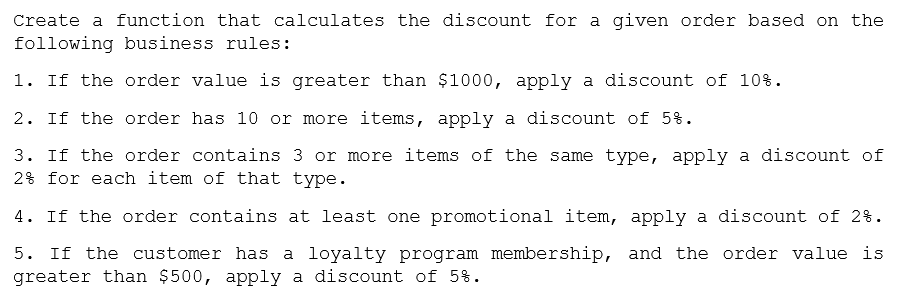

Describe what you need in simple sentences and build over it. Say, you want to generate a function to add items to the cart. The prompt can look like either of the ones shown below:

The first prompt is simple, “AI-friendly,” and human-friendly. It states the input and output clearly. The validation/logic is concise yet descriptive to the extent needed. On the other hand, the second prompt is needlessly complex and is unclear about the input & output.
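For readers who want something to copy and adapt, here is a rough sketch in the same spirit. The prompt text below is hypothetical (written for this illustration, not the one from the image), and the function is just one plausible result a model might return for it. The name `add_to_cart` and the dict-based cart are assumptions.

```python
# Hypothetical prompt written for this sketch: it names the input, the logic
# and the output in short, plain sentences.
SIMPLE_PROMPT = """\
Write a Python function add_to_cart(cart, item_id, quantity).
Input: cart is a dict mapping item IDs to quantities, item_id is a string, quantity is an integer.
Logic: raise ValueError if quantity is less than 1. Otherwise add quantity to the item's current quantity (0 if the item is not in the cart).
Output: return the updated cart.
"""


# One plausible function that matches the prompt above (an assumption, not a
# guaranteed model output).
def add_to_cart(cart, item_id, quantity):
    if quantity < 1:
        raise ValueError("quantity must be at least 1")
    cart[item_id] = cart.get(item_id, 0) + quantity
    return cart


print(add_to_cart({"apple": 2}, "apple", 3))
```

Because the prompt spells out input, logic, and output, it is easy to check the generated function against it line by line.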

I know this is not earth-shattering – but the most obvious point is often the one that gets missed!

Choose the correct format to represent the input information:

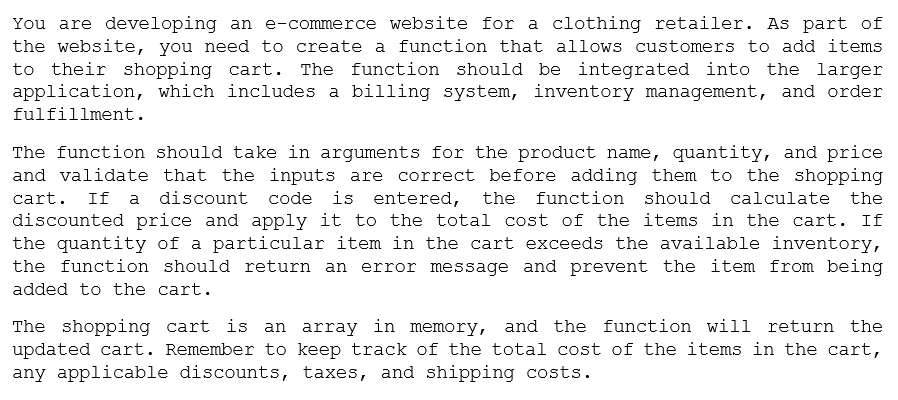

Describing a problem that consists of multiple/complex rules/logic in simple sentences can be tricky. Expressing them as a numbered list can make the prompt effective, as it provides a structured framework for AI to understand the prompt and produce the desired output. Here is an example of a prompt that calculates the discount based on some business rules.

You can see how breaking a paragraph into a numbered list improves the clarity of the prompt. It also makes the prompt easier to validate later and to change over time. Other representations, like JSON, tables, etc., can also be used. The key is to choose the representation that states the inputs with the utmost clarity and is AI-friendly.
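If your rules already live in code or a spreadsheet, you can generate the numbered list instead of typing it. Here is a minimal sketch, assuming the rules are kept as a plain Python list. The discount rules and the helper name `build_numbered_prompt` are hypothetical, made up for this illustration.

```python
# Hypothetical business rules, invented for this sketch.
DISCOUNT_RULES = [
    "If the order total is 100 or more, apply a 10% discount.",
    "If the customer is a member, apply an extra 5% discount.",
    "The total discount must never exceed 20%.",
]


def build_numbered_prompt(task, rules):
    """Put the task first, then each rule on its own numbered line."""
    lines = [task, "", "Rules:"]
    lines += [f"{number}. {rule}" for number, rule in enumerate(rules, start=1)]
    return "\n".join(lines)


print(build_numbered_prompt(
    "Write a function that calculates the discount for an order.",
    DISCOUNT_RULES,
))
```

Keeping the rules in a list also helps with the validation point: adding, removing, or reordering a rule is a one-line change, and the numbering fixes itself.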

Context lights the room:

One of the most important aspects of an effective prompt is how much context it provides. Context helps AI generate accurate responses that fit a specific scenario (specificity). A prompt that lacks context is generic and leaves room for ambiguity, so the responses to it can vary widely and may not be apt. A prompt with context gives AI clarity on the expected output. For example, the target audience is important context when generating content. Similarly, when generating code, the prompt could include information about the other modules, the larger application into which the code will be integrated, etc. Here is an example:

As you can see, it has 3 sections – the first that gives enough context, the second that explains the input & logic, and the third that describes the desired output. The same prompt can also be written with the logic represented as a numbered list.
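The three-section layout is easy to turn into a reusable template. Below is a minimal sketch, assuming the section titles “Context”, “Input and logic”, and “Desired output”. Those labels, the helper name `build_prompt`, and the sample text are my own placeholders, not a standard.

```python
def build_prompt(context, input_and_logic, desired_output):
    """Join the three sections, each under its own title, separated by blank lines."""
    sections = [
        ("Context", context),
        ("Input and logic", input_and_logic),
        ("Desired output", desired_output),
    ]
    return "\n\n".join(f"{title}:\n{body.strip()}" for title, body in sections)


# Sample values invented for this sketch.
print(build_prompt(
    context="This function is part of the checkout module of an online store.",
    input_and_logic="It receives the order total and returns the discount to apply.",
    desired_output="A Python function with a docstring and no external dependencies.",
))
```

Filling in the same three slots every time is a cheap way to notice when the context is missing before you send the prompt.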

Let’s stop here for today. I’ll continue with more hints soon… until then, Keep Prompting!

P.S.: All the example prompts are AI-generated – of course, I wrote the “prompt (prompts).” Though sanity-checked, these are still samples to illustrate the concept.

1 – Tokens, to be exact. Tokens are 1 or more characters.

2 – I’ve kept it intentionally simple. Concepts like instruction-tuned LLMs and RLHF are for the geeks to explore further.

3 – “Prompt Engineering” as defined by GPT-3.5