Executive Summary

Generative AI is revolutionizing industries, but it has a major limitation: it relies on static training data that quickly becomes outdated. Retrieval-Augmented Generation (RAG) on Amazon Bedrock addresses this problem by combining information retrieval with generative AI, so that responses draw on current source material and are both contextually accurate and factually up to date. Amazon Bedrock’s managed RAG platform makes this capability accessible to businesses through automated, scalable retrieval, improved relevance, and real-time content generation. From legal research to customer support, Amazon Bedrock RAG is set to transform how businesses deliver precise, timely, and dynamic AI-driven solutions.

The Evolution of Generative AI (GenAI)

Generative AI (GenAI) has transformed the way we interact with technology, allowing machines to produce human-like text, images, and audio. These models, trained on vast datasets, excel at generating contextually relevant responses, making them invaluable in areas like customer support, content creation, and more. However, traditional GenAI models are inherently limited by their dependence on static training data. This means they struggle to provide accurate answers when handling rapidly changing information or responding to highly specific queries.

For example, a GenAI chatbot may be unable to accurately answer questions about a recent product update or legal change without being retrained on that information. This gap highlights the need for a more adaptive approach, one that allows AI to access real-time, accurate information as needed.

How Is RAG a Game Changer for GenAI?

Retrieval-Augmented Generation (RAG) is a breakthrough that overcomes the limitations of traditional GenAI by combining it with retrieval capabilities. Instead of relying solely on pre-existing knowledge, RAG-enabled models can access external sources, such as databases and knowledge bases, to retrieve relevant, up-to-date information dynamically. This allows RAG models to generate responses that are not only contextually accurate but also factually current.

For example, a RAG-powered customer service bot can access specific product details or policy changes stored in an external knowledge base, providing accurate responses without needing to be retrained. By bridging the gap between generative capabilities and real-time information retrieval, RAG offers a solution that traditional GenAI models cannot match, particularly in fields where accuracy and relevance are critical.
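The retrieve-then-generate flow described above can be sketched in a few lines. This is a minimal illustration, not Amazon Bedrock's implementation: the knowledge base contents are invented, bag-of-words cosine similarity stands in for a real embedding model, and the function names are hypothetical. The sketch stops at building the augmented prompt, which would then be sent to a generative model.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base; a real system would hold documents in a vector store.
KNOWLEDGE_BASE = [
    "Version 2.4 of the mobile app adds offline mode and dark theme.",
    "Refunds are accepted within 30 days of purchase with a valid receipt.",
    "Premium support is available on weekdays from 9am to 5pm.",
]


def vectorize(text: str) -> Counter:
    """Count lowercase word tokens; a crude stand-in for an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Return the top_k documents most similar to the query."""
    query_vec = vectorize(query)
    ranked = sorted(documents, key=lambda d: cosine(query_vec, vectorize(d)), reverse=True)
    return ranked[:top_k]


def build_prompt(query: str, passages: list[str]) -> str:
    """Augment the user question with the retrieved passages."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


question = "Within how many days are refunds accepted?"
prompt = build_prompt(question, retrieve(question, KNOWLEDGE_BASE))
print(prompt)
```

Because the answer comes from the retrieved passage, updating the knowledge base entry changes the model's context without any retraining.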

The Role of Knowledge Bases in RAG

A knowledge base is a structured repository of information, containing documents, facts, and other resources that can be accessed to provide real-time, accurate responses. Knowledge bases are foundational to RAG systems, allowing models to retrieve highly relevant, context-specific data when generating responses. For instance, in a customer support scenario, a knowledge base can store product information, troubleshooting guides, and policy details, all of which can be pulled by a RAG model to enhance the accuracy of responses. This makes knowledge bases essential for applications that require on-demand information retrieval, as they ensure that responses are not only informed but also up-to-date and relevant to the user’s needs.

The Magic Behind Amazon Bedrock’s RAG: How It All Works and Its Key Features

Amazon Bedrock is a managed platform designed to help businesses deploy and scale RAG solutions effortlessly. By offering a robust infrastructure, Bedrock simplifies the complexities of retrieval and data integration required for RAG, enabling companies to harness the full potential of real-time information without extensive backend work.

Key Features

- Managed Retrieval Pipeline: RAG on Bedrock provides an automated end-to-end retrieval pipeline, reducing manual tasks like query formation and data retrieval, letting developers focus on deployment without needing backend setup expertise.

- Enhanced Relevance with Embedding Models: Using embedding models, RAG delivers contextually rich, intent-aligned data retrieval, moving beyond keyword searches for responses that accurately reflect user needs.

- Security and Scalability with Serverless Architecture: RAG on Bedrock runs on a serverless architecture that scales seamlessly and applies robust security protocols to protect data.

- Real-Time and Dynamic Content Generation: RAG enables dynamic content generation, ideal for applications like customer service and news, where real-time, accurate responses are critical.

- Cost Efficiency: Because the serverless infrastructure scales dynamically with demand, businesses avoid paying for idle capacity and reduce infrastructure management costs.
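As a rough illustration of what calling a managed retrieval pipeline can look like, the sketch below builds a request for the `retrieve_and_generate` operation of the boto3 `bedrock-agent-runtime` client. The knowledge base ID, model ARN, and region are placeholders, and the helper function names are hypothetical. Running `ask_knowledge_base` assumes boto3 is installed, AWS credentials are configured, and a knowledge base already exists.

```python
def build_rag_request(question: str, knowledge_base_id: str, model_arn: str) -> dict:
    """Build the keyword arguments for a RetrieveAndGenerate call."""
    return {
        "input": {"text": question},
        "retrieveAndGenerateConfiguration": {
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledge_base_id,
                "modelArn": model_arn,
            },
        },
    }


def ask_knowledge_base(
    question: str,
    knowledge_base_id: str,
    model_arn: str,
    region: str = "us-east-1",  # assumed region; use the one hosting your knowledge base
) -> str:
    """Send the question to Bedrock and return the generated answer text."""
    import boto3  # imported here so the request builder works without the AWS SDK

    client = boto3.client("bedrock-agent-runtime", region_name=region)
    response = client.retrieve_and_generate(
        **build_rag_request(question, knowledge_base_id, model_arn)
    )
    return response["output"]["text"]


# Placeholder identifiers, not real resources.
request = build_rag_request(
    "What changed in the latest refund policy?",
    knowledge_base_id="KB_ID_PLACEHOLDER",
    model_arn="MODEL_ARN_PLACEHOLDER",
)
print(request)
```

Retrieval, prompt augmentation, and generation all happen behind this single call, which is the practical meaning of a managed pipeline.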

A Case in Point: GenAI for the Legal Industry

The legal industry is undergoing a significant transformation, driven by increasing demand for efficient, accurate, and scalable solutions. Traditionally, legal professionals spend extensive time and resources on repetitive, labor-intensive tasks such as case research, citation management, and document review. These manual processes are not only time-consuming but also prone to errors, which increases operational costs and delays litigation.

Generative AI (GenAI) provides a solution by automating routine tasks, enabling lawyers and paralegals to focus on strategic decision-making. However, traditional GenAI models are limited in handling domain-specific requirements and real-time legal data retrieval, making them unsuitable for legal use cases that demand precision and up-to-date references. This is where Retrieval-Augmented Generation (RAG) steps in to bridge the gap, offering tailored, dynamic, and accurate AI-powered assistance to meet the unique demands of the legal domain.

Real-World Use Cases of RAG on Bedrock in the Legal Industry

- Contract Analysis and Risk Assessment

RAG on Bedrock can assist legal teams by analyzing lengthy contracts and identifying potential risks or clauses that require attention. By retrieving relevant case law and precedents from a knowledge base, it helps lawyers assess risks and ensure compliance with industry standards more efficiently, reducing manual review time.

- Legal Compliance Monitoring

With RAG, firms can stay updated on regulatory changes across multiple jurisdictions. By continuously pulling information from databases on new laws, guidelines, or compliance requirements, RAG-enabled solutions can help legal professionals quickly assess how these changes impact their clients, providing proactive guidance and ensuring adherence to current regulations.

- Legal Case Summarization & Citation

In the legal field, professionals often dedicate significant time to summarizing cases and managing citations. RAG on Bedrock streamlines this process by automatically generating concise summaries and retrieving accurate citations from a comprehensive knowledge base. This capability not only enhances efficiency but also ensures that legal practitioners have quick access to relevant case information, allowing them to focus on building stronger arguments and strategies.

Hexaware’s RAG-Powered Legal Assistant Proof-of-Concept (POC)

Problem Statement:

Legal professionals often spend a significant amount of time on routine research and documentation. Our goal was to see if we could automate some of these tasks, allowing lawyers to focus on more strategic work.

Solution:

Our team developed a proof of concept (POC) of an LLM-powered legal assistant built on Amazon Bedrock. Using a Retrieval-Augmented Generation (RAG) approach with Pinecone as the vector store, the solution supports efficient legal research, document summarization, and case citation retrieval. This allows lawyers to save time on tedious tasks and focus on more complex and productive activities.

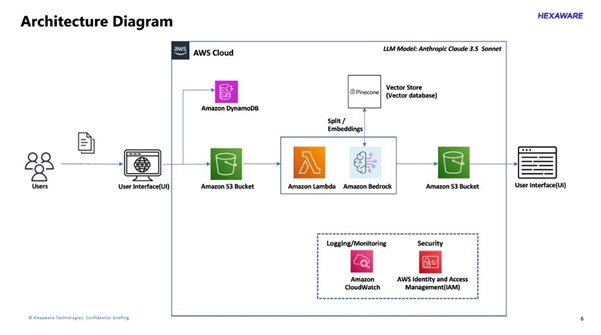

Architecture Diagram:

The architecture integrates multiple AWS services to deliver an end-to-end solution for analyzing legal documents and conducting relevant legal research, accessible through a user-friendly UI.

Process Flow:

- Document Upload and Processing: Users upload documents to an Amazon S3 bucket via a user-friendly interface. Amazon DynamoDB stores login information.

- Data Preprocessing and Embedding Generation: A Lambda function processes the documents, creating embeddings to help the model understand the content semantically.

- RAG Retrieval and Analysis: Amazon Bedrock, integrated with Pinecone and Anthropic’s Claude, retrieves case citations and other relevant legal information from a knowledge base. This ensures precise and relevant responses.

- Output and Monitoring: Processed results, like document summaries and case references, are stored in S3 and accessible through the UI. CloudWatch monitors system health, and IAM manages secure access.
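The upload and preprocessing steps above might look roughly like the following inside a Lambda function. This is a sketch under stated assumptions, not the POC's actual code: the function names, chunk sizes, and sample bucket and key are hypothetical, and the embedding model is passed in as a plain callable because the source does not say which model was used. Only the S3 event notification layout (`Records`, `s3.bucket.name`, `s3.object.key`) follows the documented AWS format.

```python
from typing import Callable
from urllib.parse import unquote_plus


def s3_objects_from_event(event: dict) -> list[tuple[str, str]]:
    """Extract (bucket, key) pairs from an S3 event notification."""
    pairs = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 events, so decode them.
        key = unquote_plus(record["s3"]["object"]["key"])
        pairs.append((bucket, key))
    return pairs


def chunk_text(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into windows of chunk_size words that share overlap words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    words = text.split()
    if not words:
        return []
    step = chunk_size - overlap
    last_start = max(len(words) - overlap, 1)
    return [" ".join(words[i:i + chunk_size]) for i in range(0, last_start, step)]


def index_document(
    text: str,
    embed: Callable[[str], list[float]],
    chunk_size: int = 200,
    overlap: int = 40,
) -> list[dict]:
    """Pair each chunk with its embedding, ready to upsert into a vector store."""
    return [
        {"chunk": chunk, "embedding": embed(chunk)}
        for chunk in chunk_text(text, chunk_size, overlap)
    ]


def fake_embed(chunk: str) -> list[float]:
    """Placeholder embedding for local testing; a real model returns a dense vector."""
    return [float(len(chunk))]


sample_event = {
    "Records": [
        {"s3": {"bucket": {"name": "legal-docs"},
                "object": {"key": "contracts/master+agreement.txt"}}}
    ]
}
uploaded = s3_objects_from_event(sample_event)
records = index_document(
    "one two three four five six seven eight nine ten",
    embed=fake_embed, chunk_size=4, overlap=1,
)
print(uploaded, len(records))
```

Overlapping chunks are a common choice because a clause that straddles a chunk boundary still appears whole in at least one chunk, as long as it is shorter than the overlap.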

Outcome:

This POC demonstrated the potential for RAG to reduce time spent on legal research, providing timely and accurate information while enabling lawyers to focus on high-value activities.

USPs of our RAG-Powered Solution on Amazon Bedrock

- Enhanced Legal Strategy:

  - Rapidly identifies pertinent cases to strengthen arguments and legal strategies.

  - Tailored search capabilities provide jurisdiction-specific results for increased relevance.

- Significant Time and Cost Savings:

  - Reduces legal research time by 90%.

  - Cuts litigation preparation costs by automating citation generation and document analysis.

- Customizable and Scalable:

  - Offers flexible search parameters to adapt to varying legal contexts.

  - Scales seamlessly to meet the demands of large legal firms and enterprises.

- Ease of Use:

  - Features intuitive interfaces, making it accessible to attorneys, paralegals, and research analysts with minimal training.

  - Integrates seamlessly with existing legal tech stacks for smooth workflows.

- Accuracy and Risk Mitigation:

  - Ensures citation compliance with prescribed formats like Bluebook or custom requirements.

  - Mitigates risks by providing exhaustive coverage of relevant case laws, reducing chances of oversight.
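To make the citation formatting point concrete, the sketch below renders a retrieved case record in the common "name, volume reporter page (year)" pattern. It is a deliberately simplified, hypothetical illustration: the `CaseCitation` class and `format_citation` function are not part of the POC, and the code does not implement the Bluebook's abbreviation or typeface rules.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class CaseCitation:
    """Structured fields a retrieval step might return for a case."""
    case_name: str
    volume: int
    reporter: str
    first_page: int
    year: int
    court: Optional[str] = None  # left out when the reporter already implies the court


def format_citation(citation: CaseCitation) -> str:
    """Render 'Name, Volume Reporter Page (Court Year)'; court is optional."""
    parenthetical = (
        f"{citation.court} {citation.year}" if citation.court else str(citation.year)
    )
    return (
        f"{citation.case_name}, {citation.volume} {citation.reporter} "
        f"{citation.first_page} ({parenthetical})"
    )


brown = CaseCitation("Brown v. Board of Education", 347, "U.S.", 483, 1954)
print(format_citation(brown))  # Brown v. Board of Education, 347 U.S. 483 (1954)
```

Keeping citations as structured fields rather than free text lets the same record be rendered in a firm's custom format by swapping the formatting function.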

Conclusion

As AI technology advances, the Amazon Bedrock RAG architecture is well positioned to take advantage of emerging trends, such as integrating multi-modal data sources or using autonomous agents for more complex information retrieval. These enhancements will enable more sophisticated, context-rich responses, broadening the range of applications for RAG across industries.

Amazon Bedrock’s RAG approach represents a major leap forward by integrating retrieval capabilities with generative AI, enabling scalable, accurate, and contextually rich responses. Through managed retrieval pipelines, embedding models, and serverless architecture, RAG ensures real-time relevance and cost efficiency. The legal assistant POC highlights RAG’s potential to streamline tasks and automate research, allowing professionals to focus on strategic priorities. With ongoing developments, RAG is set to redefine information retrieval and AI applications across diverse fields.